I’ve been thinking a lot about the issue of trust in computing lately. It’s actually something I started thinking about almost 40 years ago, when I had my first job developing software. At one point in our design work, a couple of us started talking about what we call “threat analysis” for the software we were developing. Specifically, we talked about ways the software could be compromised or manipulated to steal. We wanted to make sure the software was safe, and we were clearly aware of the great power and responsibility we had as software developers.

At the same time, another group in the company was working with a clothing manufacturer. During testing, when the older manual system and the new computerized system were running in parallel, it became obvious that something was going wrong. The police were called and an undercover operation was set up. Not long after, a number of employees were arrested for running a major inside theft ring. The old manual system had been compromised, and only the thieves’ inability to manipulate the new computer system allowed the thefts to be discovered.

Any system can be compromised with the right access. People need to be able to trust other people, or the system breaks down. As computers become increasingly integral to our lives, the ability to trust our systems and the people who design, create and operate them becomes more important. Can we trust the people who have our data?

The image above is something of an eye-opener for many people. The cloud is something of a myth. What we call the cloud is just putting data and/or applications on someone else’s computer. Can we trust the people who run those computers? And if we can, how far can we trust them?

Constant news stories about people breaking into corporate data systems and stealing credit card data, login data, and other personally identifying information bring home the importance of those systems being secure. For most of our personal data (email, and cloud storage of files and images), we are increasingly trusting third parties such as Google, Microsoft, Amazon and others to store our data for us. It’s easy. It’s cheap. It’s easy to assume they can do it better, whatever that means. We trust them to keep the data safe from malicious agents on the internet. And we trust them, the companies, not to misuse our data. We trust them not to share it with governments without permission or a court order. We trust them not to sell the data to others. In short, we do a lot of trusting. Should we be trusting them so much? That is a hard question to answer, and I think many of us have companies that we trust more or less than others.

The Computer Science Curricula 2013 guidelines (PDF) include recommendations that Professional Ethics, Privacy and Civil Liberties, as well as Security Policies, Laws and Computer Crimes, be included in a computer science curriculum. In fact, while the guidelines talk about teaching many of these issues in a standalone course, the committee made a point that these issues must also be discussed in the context of different courses. I think this is important. And I think it needs to start young.

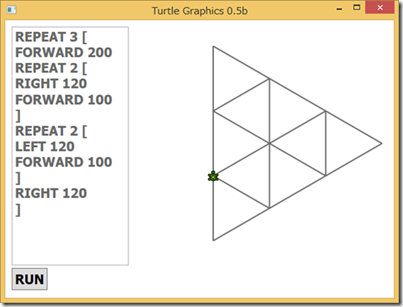

Where I teach, we talk about many of these issues in our Explorations in Computer Science course. We try to work them into our other software courses as well. I think we could do it better, though. I think we all want people developing software who have solid ethics and who understand and value the need to be trustworthy. We need to be careful when we teach students to help them understand that not everything that can be done should be done. We need people to consider the consequences, intended and unintended, of the work they do. Our future depends on it.

Disclosure: I was privileged to be a member of the ACM/IEEE Joint Task Force that wrote the CS 2013 recommendations.

![code_quality[1] code_quality[1]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEhX-tQmpFk7pRiMR7iqZ4QoP2A0ukQGEiqLOHkgazOFkfJWjWChz4CJt8Y_z9W4MTf17Als45cgnfD9rpBi78xSV7U4U7oUQ-29DAn4LQzNk_oXMXHX8NR149H1EmwoK9Mi7C8Ysg/rw/?imgmax=800)

![image_thumb[1] image_thumb[1]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEhKhYhUg-5zPt2h10wGV0mMr8cZHKg6KlM2HZMpUpp_8FoaQXANCzhs5S5OagMZOCyuA0vX5xZE-pBgTIEAW6k23ab-V_7DiTiwyH_4k3nbP2iCHH3ZBY9Yvw_U5hpZULT5iQmDEQ/rw/?imgmax=800)